Discover how audio for XR works, and learn how it compares to audio you’ve already experienced in games and interactive media.

To be a game audio designer in the industry these days means having a lot more skills than just creating epic game audio content. It also means keeping up with changes and additions to the technology, and being on the lookout for new immersive audio methods and techniques that can enhance the player’s experience.

So, you’ve likely heard lots of fancy new acronyms like VR, AR, MR, and most recently XR describing the fields of Virtual, Augmented, Mixed and Extended or Expanded Reality (nobody agrees on what XR means currently). And it’s possible you’ve come across articles talking all about audio for these mediums and how it’s completely different from audio you’ve experienced in games or other interactive media.

Well, yes and no. Let’s demystify how audio for XR works and how it’s both similar to and different from audio you’ve already experienced in interactive media, especially games.

Let’s start with some basic facts about how XR-based audio works:

- Audio for XR is still non-linear audio, at least if you’re talking about interactive experiences or games. So the workflow in XR media will be similar to the one you’re already familiar with from game development.

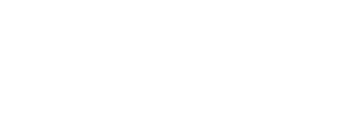

- 3D games have already had a very robust (though somewhat less realistic) form of spatial audio for well over two decades. XR games can often just use simple 3D spatial audio, or even plain 2D audio.

- Whether to use these sophisticated new technologies depends on whether the game itself needs them. One of the best-selling games on the Oculus Quest Store, Beat Saber, uses mainly 2D audio. Other games use basic 3D audio, and still others use a combination of existing and new 3D techniques.

- Last, and perhaps surprisingly, all of the new technologies I’m going to mention can be used in non-VR games. You don’t even need a VR headset to experience this new form of spatialized audio. Actually, you don’t even need a game: you can experience it through Dolby’s new Atmos-oriented spatial music tracks, which you can listen to on AirPods.

All right, now that we’ve laid the groundwork on what hasn’t changed, let’s talk about what has been added to the audio arsenal. Some of these additions are also driven by functionality found in XR-based games and media.

Binaural Based

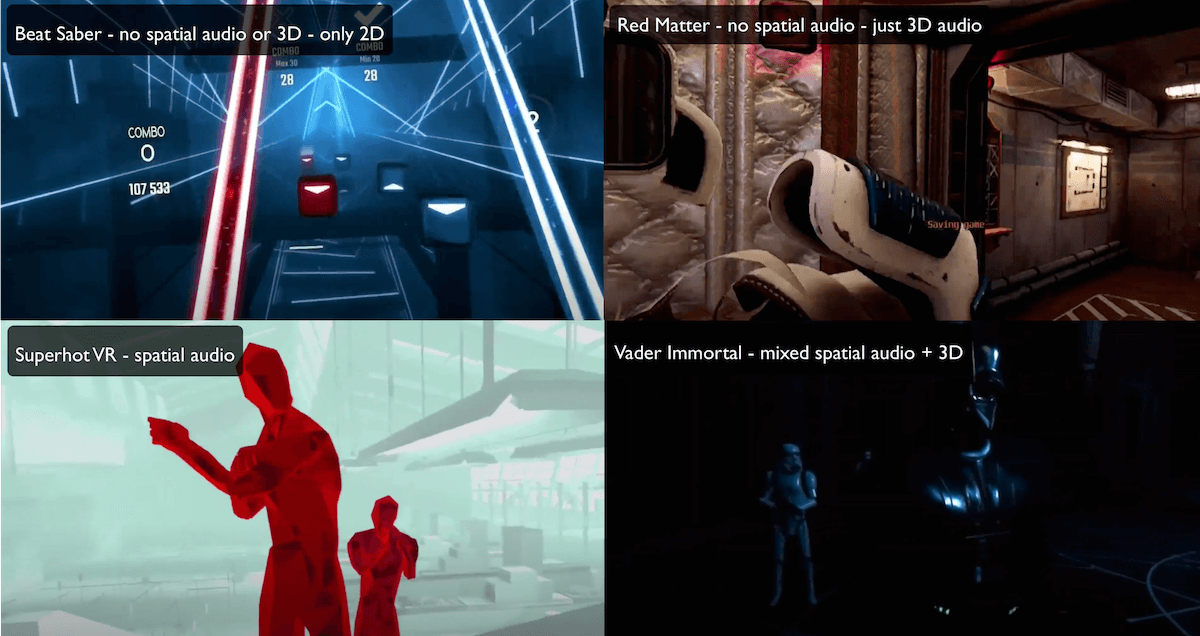

Because XR devices are currently designed for a single user, audio is sent to each individual device. XR doesn’t currently use multi-speaker setups, so the most common playback situation is either small speakers on the device itself or a separate pair of headphones or earphones.

Preparing spatialized audio for headphones requires binaural encoding. So in XR, the spatialized game audio is sent to a binaural decoder or renderer, which processes the sounds so they sound convincing on headphones.
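Full binaural renderers use measured HRTFs, covered later in this article. As a much simpler illustration of what "convincing on headphones" involves, here is a minimal sketch of two cues such a renderer reproduces: the interaural time difference (ITD), using Woodworth’s textbook spherical-head approximation, and a crude interaural level difference (ILD). This is a swapped-in simplified model, not how any particular XR renderer works; the head radius, speed of sound, 6 dB maximum ILD, and the function names are assumptions for illustration.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius (8.75 cm)
SPEED_OF_SOUND = 343.0   # metres per second, roughly room temperature
MAX_ILD_DB = 6.0         # hypothetical level difference for a source fully to one side


def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth ITD. Azimuth is clockwise from straight ahead (positive = right).

    Positive results mean the right ear hears the sound first. Rear angles are
    folded onto the front half, since this model gives front/back the same ITD.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))  # fold to [-90, 90] degrees
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (lateral + math.sin(lateral))


def ear_gains(azimuth_deg: float) -> tuple[float, float]:
    """Split a crude ILD symmetrically between the ears; returns (left, right)."""
    ild_db = MAX_ILD_DB * math.sin(math.radians(azimuth_deg))
    return 10 ** (-ild_db / 40), 10 ** (ild_db / 40)


def render_binaural(mono: list[float], azimuth_deg: float,
                    sample_rate: int = 48_000) -> tuple[list[float], list[float]]:
    """Delay and attenuate the far ear; both outputs have the same length."""
    itd = itd_seconds(azimuth_deg)
    lag = round(abs(itd) * sample_rate)          # ITD rounded to whole samples
    g_left, g_right = ear_gains(azimuth_deg)
    early = list(mono) + [0.0] * lag             # near ear: no delay, padded at the end
    late = [0.0] * lag + list(mono)              # far ear: delayed by the ITD
    if itd >= 0:  # source on the right, so the left ear is the far ear
        return [g_left * s for s in late], [g_right * s for s in early]
    return [g_left * s for s in early], [g_right * s for s in late]


left, right = render_binaural([1.0], azimuth_deg=90.0)
print(f"ITD at 90 deg: {itd_seconds(90.0) * 1000:.2f} ms, "
      f"left ear delayed by {left.index(max(left))} samples")
# prints: ITD at 90 deg: 0.66 ms, left ear delayed by 31 samples
```

A difference of well under a millisecond is enough for the brain to place a sound to one side, which is why headphone playback needs this kind of processing rather than plain stereo panning.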

Okay, now that we’ve covered our ears, let’s discuss the head and why it’s significant for XR.

In XR-based games and apps, more attention is commonly paid to what the hands and head do in the game space. This is less of a concern in traditional 3D FPS games, but in XR, head rotation in particular is tracked, and spatial audio relates to it in one of two ways:

- Head Tracked audio changes its apparent orientation as the head rotates, just as a real sound would. So if a sound is in front of you and you turn your head to the left, the sound now appears to come from your right side.

- Head Locked audio does not change its orientation relative to the head as it rotates. The audio stays put relative to your ears, so in effect it acts like 2D audio, unaffected by distance or direction.
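The difference between the two comes down to whether head rotation is subtracted from the sound’s direction. Here is a minimal sketch, assuming a single yaw angle (no pitch or roll) and hypothetical function names. Angles are in degrees, clockwise from straight ahead, so positive means to the listener’s right.

```python
def wrap_degrees(angle: float) -> float:
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def apparent_azimuth(source_azimuth: float, head_yaw: float, head_locked: bool) -> float:
    """Where the listener hears a sound, relative to the direction they face.

    source_azimuth: for head-tracked audio, the sound's direction in the world;
                    for head-locked audio, its direction relative to the head.
    head_yaw:       how far the listener has turned (positive = to the right).
    """
    if head_locked:
        # Head-locked: the sound turns with the head, so head rotation changes nothing.
        return wrap_degrees(source_azimuth)
    # Head-tracked: the sound stays fixed in the world, so it shifts opposite to the turn.
    return wrap_degrees(source_azimuth - head_yaw)


# A sound straight ahead; the listener turns 90 degrees to the left (yaw = -90).
print(apparent_azimuth(0.0, -90.0, head_locked=False))  # 90.0 (now on the right)
print(apparent_azimuth(0.0, -90.0, head_locked=True))   # 0.0 (still straight ahead)
```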

Now let’s cover the two new spatial audio technologies emerging in XR.

Ambisonic Microphones

Ambisonic Audio

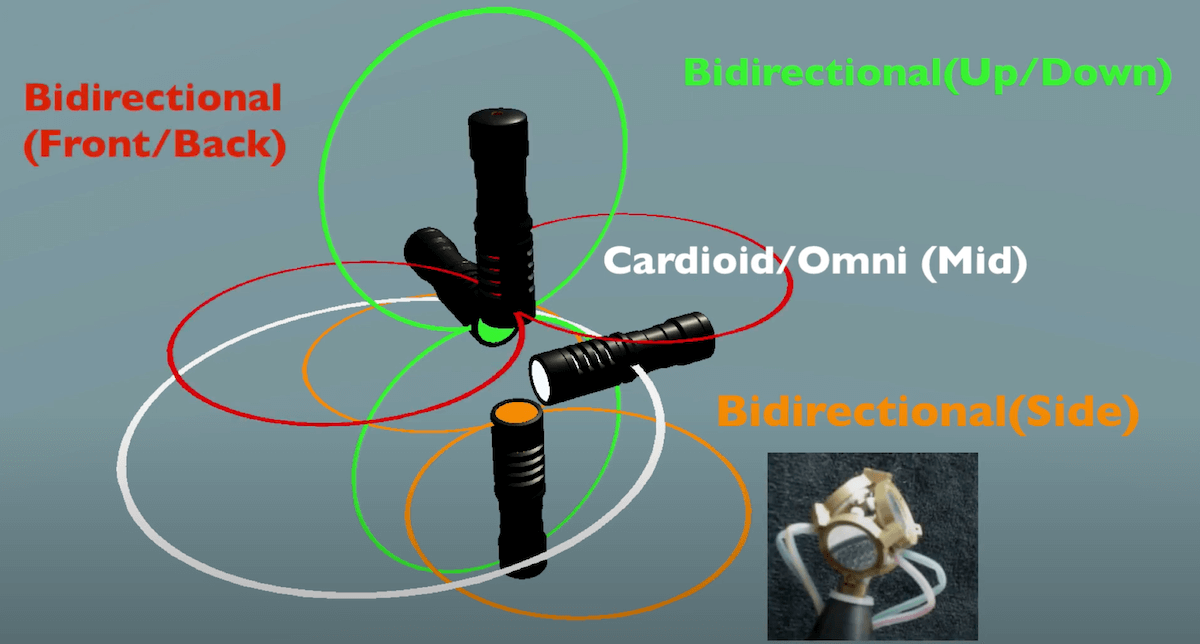

Ambisonic audio is the older of these two spatial audio technologies. It grew out of the M/S (Mid Side) miking technique: the ambisonic pioneers added two more microphones, which over time led to the development of the Soundfield microphone with four capsules. The technology was later expanded so that existing recorded audio could also be encoded into the format.
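To make the M/S connection concrete: M/S pairs a forward-facing mid mic with a sideways figure-eight, and first-order ambisonic B-format keeps an omnidirectional W channel (playing a role similar to the mid mic) and a left/right figure-eight Y (like the side mic), then adds figure-eights for front/back (X) and up/down (Z). The sketch below decodes M/S to stereo and then "points" a virtual mic inside a horizontal first-order recording. The SN3D normalization, the azimuth convention, the `pattern` parameter, and the function names are assumptions for illustration; real decoders handle more channels and conventions.

```python
import math


def ms_to_lr(mid: list[float], side: list[float]) -> tuple[list[float], list[float]]:
    """Decode Mid/Side to Left/Right using the common L = M + S, R = M - S convention."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


def virtual_mic(w: list[float], x: list[float], y: list[float],
                azimuth_deg: float, pattern: float = 0.5) -> list[float]:
    """Point a virtual first-order mic into a horizontal B-format recording.

    Assumes SN3D-normalised W (omni), X (front/back) and Y (left/right) channels,
    with azimuth counter-clockwise from the front (positive = left).
    pattern: 1.0 = omni, 0.5 = cardioid, 0.0 = figure-eight.
    """
    az = math.radians(azimuth_deg)
    return [pattern * wi + (1.0 - pattern) * (xi * math.cos(az) + yi * math.sin(az))
            for wi, xi, yi in zip(w, x, y)]


# One sample of a source straight ahead: W = 1, X = 1, Y = 0 under SN3D.
front = virtual_mic([1.0], [1.0], [0.0], azimuth_deg=0.0)   # cardioid facing it
back = virtual_mic([1.0], [1.0], [0.0], azimuth_deg=180.0)  # cardioid facing away
```

Because the directional channels can be recombined after recording, a single ambisonic take can be "re-aimed" later, which is part of what makes the format attractive for 360 work.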

Since the patent expired, the four-capsule (or tetrahedral) mic has become popular, with several products on the market, and it is now the go-to choice for anyone recording audio for 360 film projects.

Ambisonic audio achieves better soundstage accuracy than standard stereo, especially in its depiction of height. The level of detail is governed by the order: first-order ambisonic files provide the lowest level, and higher orders offer progressively greater detail, although at greater computational cost.
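The cost grows quickly with order: a full-sphere stream of order N has (N + 1)² channels, so first order needs 4, second order 9, and third order 16, and every one of them has to be decoded. The sketch below shows that count, plus how a single mono sample is encoded (panned) into first order. It assumes the AmbiX convention (ACN channel order, SN3D normalization); other conventions, such as FuMa, order and scale the channels differently. The function names are illustrative.

```python
import math


def ambisonic_channel_count(order: int) -> int:
    """A full-sphere ambisonic signal of order N carries (N + 1) ** 2 channels."""
    return (order + 1) ** 2


def encode_first_order(sample: float, azimuth_deg: float,
                       elevation_deg: float) -> tuple[float, float, float, float]:
    """Encode one mono sample as first-order AmbiX: ACN channel order W, Y, Z, X, SN3D.

    Azimuth is counter-clockwise from the front (positive = left);
    elevation is positive upwards.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample                                   # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)     # left/right figure-eight
    z = sample * math.sin(el)                    # up/down figure-eight
    x = sample * math.cos(az) * math.cos(el)     # front/back figure-eight
    return w, y, z, x


for order in (1, 2, 3):
    print(order, ambisonic_channel_count(order))  # 1 4, then 2 9, then 3 16
```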

Explore Ambisonics libraries from Pro Sound Effects here.

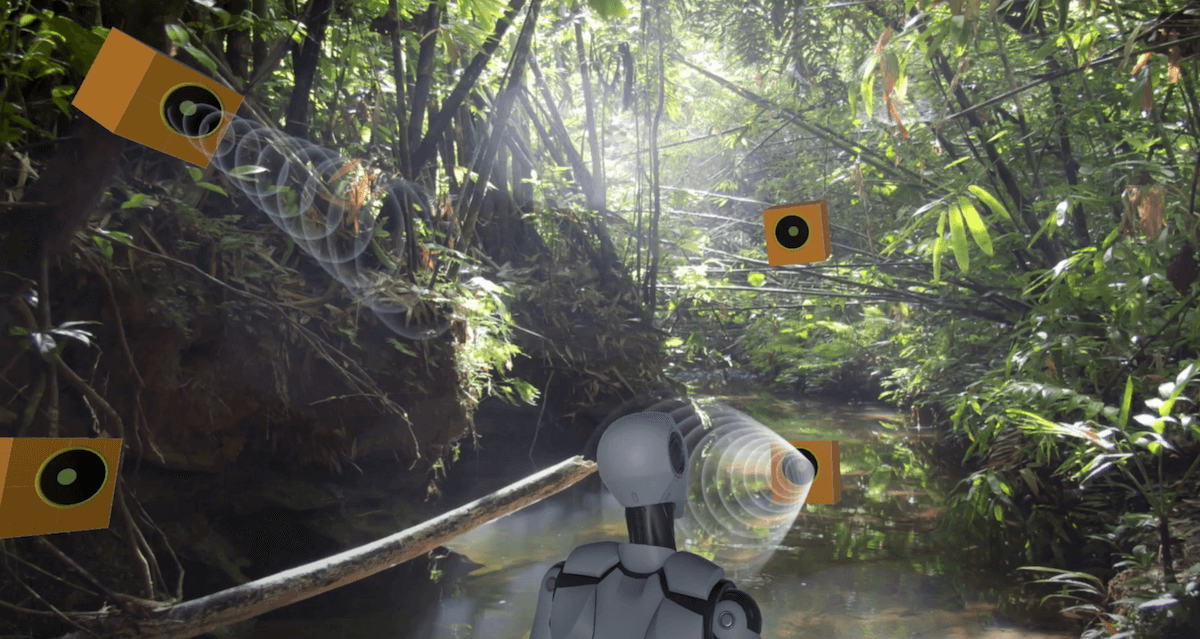

Ambisonic Ambience

Strengths for Game Audio:

- Ambisonic audio was popular in the earliest days of VR (especially phone-based VR).

- Great for 360 film, or for audio in which the player’s head position does not change.

- Non-local ambiences can also benefit from ambisonic audio.

- Music can benefit too, since ambisonics offers a larger soundstage for your musical parts to live in.

Weaknesses for Game Audio:

- Cannot track position relative to the player. This means that if an object in a game plays an ambisonic file, moving further away from it or closer to it makes no difference to the sound; only rotating your head will affect it.

- Local spatial sounds using ambisonic files will not work well unless the player is somehow locked into position and can only rotate their head, as in a flight simulation.

- Higher-order ambisonic files are generally not supported, mainly because decoding them taxes the processor. The Unity and Unreal game engines natively support only first-order ambisonic files.
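The rotation-only behavior follows from the math. A head turn can be applied to an ambisonic bed as a rotation of its directional channels, as in this minimal sketch for a yaw (left/right) turn, but there is no corresponding operation for moving closer or further away. The function name and conventions (AmbiX channel order, counter-clockwise azimuth) are assumptions for illustration.

```python
import math


def rotate_for_head_yaw(w: float, y: float, z: float, x: float,
                        head_yaw_deg: float) -> tuple[float, float, float, float]:
    """Counter-rotate one first-order AmbiX frame (W, Y, Z, X) for a head turn.

    head_yaw_deg: how far the listener has turned to the left (counter-clockwise).
    A yaw turn only mixes X and Y; W (omni) and Z (up/down) pass through unchanged.
    """
    a = math.radians(-head_yaw_deg)  # the sound field turns opposite to the head
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return w, y_rot, z, x_rot


# A source straight ahead (W=1, Y=0, Z=0, X=1); the listener turns 90 degrees left.
w, y, z, x = rotate_for_head_yaw(1.0, 0.0, 0.0, 1.0, head_yaw_deg=90.0)
print(round(y, 6), round(x, 6))  # -1.0 0.0 -> now on the listener's right (negative Y)
```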

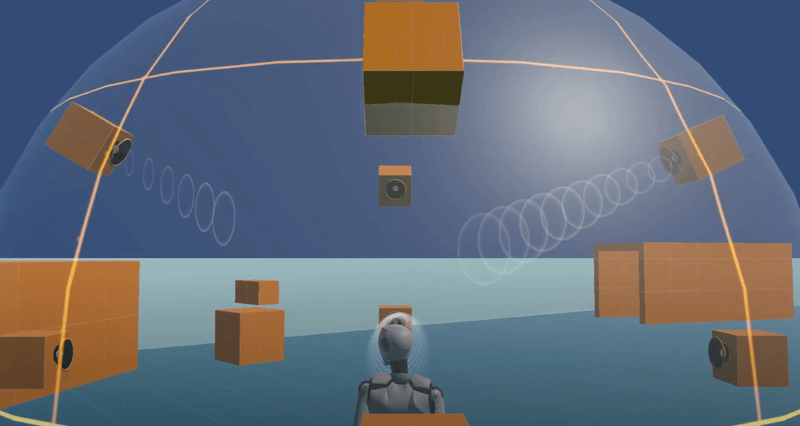

Ambisonic Sphere

HRTF Based Audio

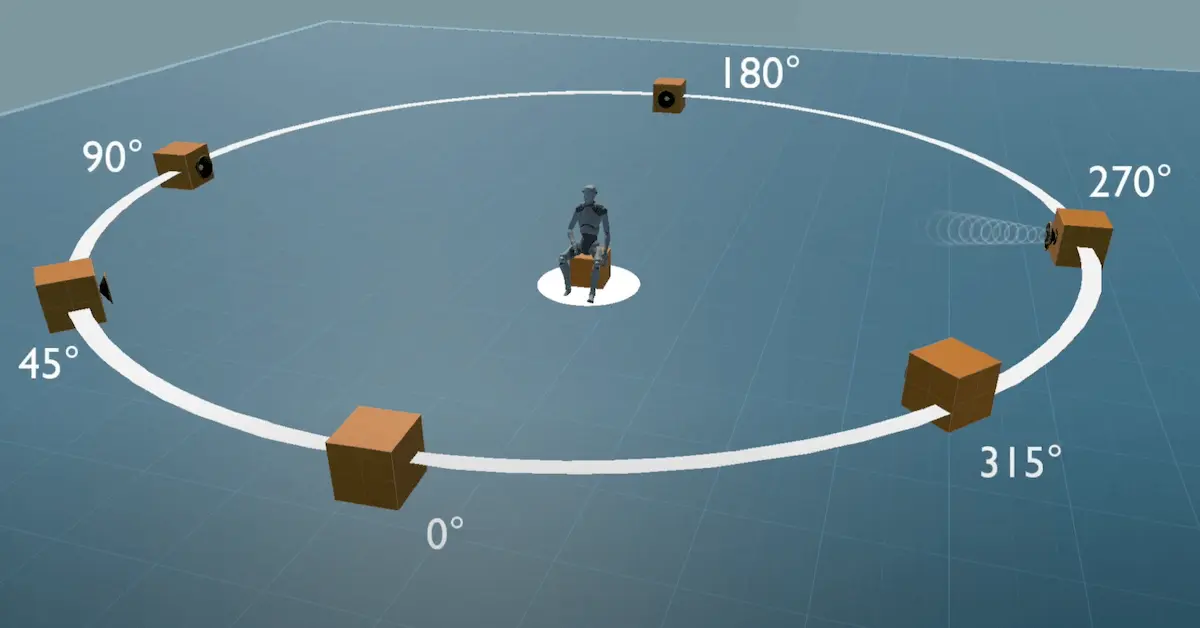

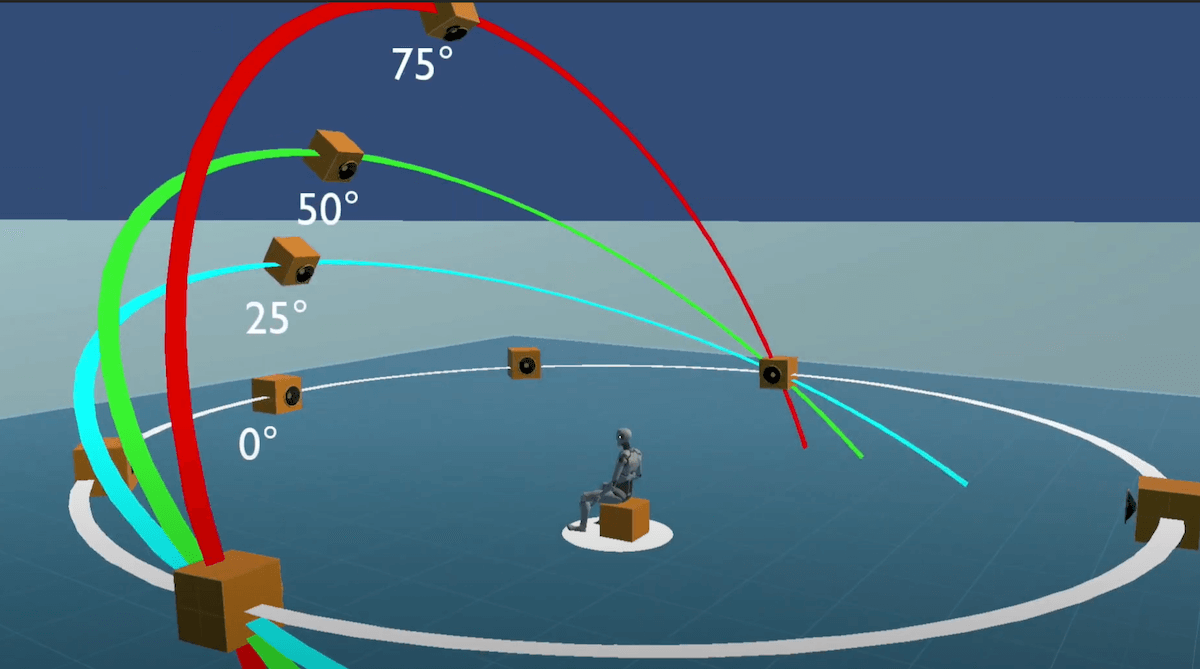

Head Related Transfer Function (HRTF) is a form of binaural audio filtering based on measurements from a group of subjects who had binaural microphones placed in their ears and were subjected to a battery of tests over the entire frequency range, at different angles (azimuth) and at different heights (elevation).

Because this frequency response data is recorded at different elevations, HRTF gives us all the benefits of standard binaural audio with elevation added to the mix. The data is compiled into an impulse response filter (HRIR), and real-time convolution is then performed on the original signal, filtering it so that it sounds as though it is arriving at your eardrums from that direction.
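The convolution step can be sketched as follows: the mono source is convolved once with the left-ear HRIR and once with the right-ear HRIR for its direction. The 4-tap HRIRs below are made-up placeholders, and the function names are illustrative. Measured HRIRs typically run to a few hundred taps per ear, a renderer picks (or interpolates) the pair nearest the source’s direction, and production code uses FFT-based convolution rather than this direct loop.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Direct-form convolution; the output has len(signal) + len(impulse_response) - 1 samples."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_hrtf(mono: list[float], hrir_left: list[float],
                hrir_right: list[float]) -> tuple[list[float], list[float]]:
    """Filter a mono source with the left- and right-ear HRIRs for one direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical 4-tap HRIRs for a source on the listener's right: the right ear
# hears it immediately and louder, the left ear two samples later and quieter.
HRIR_LEFT = [0.0, 0.0, 0.4, 0.1]
HRIR_RIGHT = [0.9, 0.2, 0.0, 0.0]

left, right = render_hrtf([1.0, 0.5], HRIR_LEFT, HRIR_RIGHT)
```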

Strengths for Game Audio:

- HRTF is an excellent audio technology that can take any 3D audio source and make it seem to come from anywhere in the space surrounding the player, including above them.

- It is also not subject to the main limitation of ambisonic audio, because head position (distance) is tracked in addition to rotation (direction). And even today’s mobile devices, like the Quest headsets, can compute several sound sources at once without excessive processor usage.
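To see why distance matters here, this is a minimal sketch of the per-source values an HRTF renderer needs: the direction relative to the listener’s head (to choose the HRIR pair) and the distance (for attenuation). The coordinate conventions, the inverse-distance gain law, and the function name are assumptions for illustration; game engines expose their own attenuation curves.

```python
import math


def source_relative_to_listener(listener_pos: tuple[float, float, float],
                                listener_yaw_deg: float,
                                source_pos: tuple[float, float, float],
                                reference_distance: float = 1.0):
    """Return (azimuth_deg, elevation_deg, distance, gain) for one sound source.

    Assumed conventions: x forward, y left, z up (at yaw 0); yaw and azimuth are
    counter-clockwise in degrees, so positive azimuth means to the listener's left.
    Gain follows a simple inverse-distance law, capped at 1 inside reference_distance.
    """
    dx, dy, dz = (s - l for s, l in zip(source_pos, listener_pos))
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    world_azimuth = math.degrees(math.atan2(dy, dx))
    azimuth = (world_azimuth - listener_yaw_deg + 180.0) % 360.0 - 180.0
    elevation = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    gain = reference_distance / max(distance, reference_distance)
    return azimuth, elevation, distance, gain


# Walking from 4 m away to 1 m away changes the gain, unlike an ambisonic bed.
far = source_relative_to_listener((0.0, 0.0, 0.0), 0.0, (4.0, 0.0, 0.0))
near = source_relative_to_listener((3.0, 0.0, 0.0), 0.0, (4.0, 0.0, 0.0))
print(far[3], near[3])  # 0.25 1.0
```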

Weaknesses for Game Audio:

- The number of HRTF-based audio sources you can have playing at once is limited, to some extent, by the platform’s processing power.

- It may not be the best-sounding solution for every kind of 3D game.
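A quick back-of-the-envelope calculation shows why the source count is capped. Convolving one source directly with a 256-tap HRIR pair at 48 kHz costs 48,000 × 256 × 2 ≈ 24.6 million multiply-adds per second. Real renderers use FFT-based convolution, which is much cheaper, but the cost still scales with the number of sources. The sketch below makes that arithmetic explicit and adds one hypothetical way to stay within a budget: render only the nearest sources with HRTF. The tap count, sample rate, selection policy, and function names are assumptions, not any engine’s actual behavior.

```python
def direct_convolution_macs_per_second(sample_rate: int, hrir_taps: int, ears: int = 2) -> int:
    """Multiply-accumulates per second to convolve one source directly (no FFT)."""
    return sample_rate * hrir_taps * ears


def pick_hrtf_sources(distances: list[float], max_hrtf_sources: int) -> list[int]:
    """Indices of the closest sources, which get full HRTF rendering.

    Hypothetical budget policy: the remaining sources would fall back to a
    cheaper panner or be culled.
    """
    closest_first = sorted(range(len(distances)), key=lambda i: distances[i])
    return sorted(closest_first[:max_hrtf_sources])


print(direct_convolution_macs_per_second(48_000, 256))  # 24576000
print(pick_hrtf_sources([5.0, 1.0, 12.0, 3.0], max_hrtf_sources=2))  # [1, 3]
```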

HRTF Elevation

Conclusion

Although both of these new spatial audio technologies can help sound designers achieve more immersive results in their games’ audio, they should not be thought of as replacements for current game audio techniques, but rather as extensions of the current audio palette. Professional designers should always focus on what serves the game best, and on what the developer or publisher can manage in its production pipeline.

Hope this article was helpful to you! We’ve just scratched the surface of XR audio in game engines. If you’d like to learn more, consider taking the Game Audio Institute course in audio for XR. We only admit 10 students, and right now GAI is offering 20% off with the code gai-vr2022-20 at checkout. Go here for more information.

Scott Looney is a passionate artist, soundsmith, educator, and curriculum developer who has been helping students understand the basic concepts and practices behind the creation of content for interactive media and games for over fifteen years. He pioneered interactive online audio courses for the Academy Of Art University, and has also taught at Ex’pression College, Cogswell College, Pyramind Training, UC Santa Cruz, City College SF, and SF State University.