Dear Reality co-founder Christian Sander discusses dearVR Spatial Connect, the new VR audio mixing tool that promises to revolutionize 3D audio workflows.

Even as 3D audio technology has advanced in recent years, the disconnect between DAWs and VR engines has remained a pain point for sound designers working in immersive media. This gap in the workflow means less time working creatively and more time problem-solving.

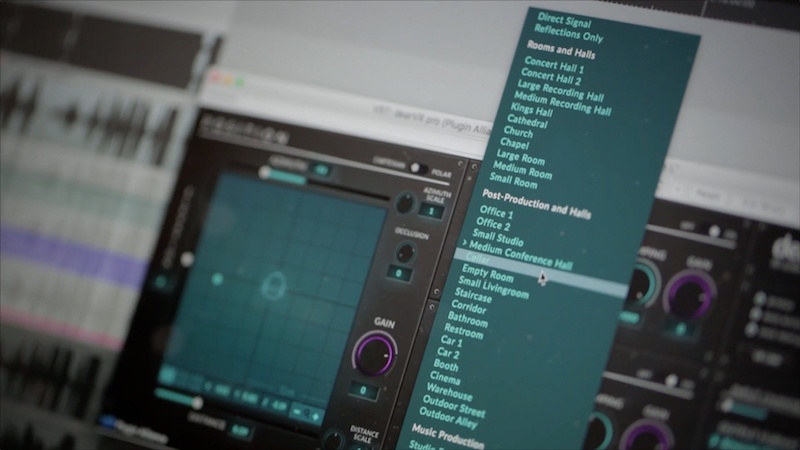

German tech startup Dear Reality has created a tool to bring these worlds together: dearVR Spatial Connect, a novel workflow that integrates with any DAW, enabling intuitive positioning and mixing of sound objects directly with VR controllers. Simply put, it lets you mix 3D audio for VR in VR.

Read our in-depth conversation with co-founder Christian Sander about Dear Reality’s origins, the tech behind Spatial Connect, the importance of HRTFs, managing output formats, platform independence and more.

Interview by Travis Fodor

Travis Fodor: What is the background of Dear Reality?

Christian Sander: We founded Dear Reality over three years ago with the mission to create both 3D audio technology and audio games. I met co-founder Achim Fell in 2012 when he was searching for binaural rendering software on mobile devices and found a paper I presented at AES in San Francisco. He's also a sound engineer, but he comes more from the game design perspective. I'm a sound engineer as well, but also a software developer.


In our first year, we had a huge contract with one of Germany’s biggest broadcasting companies to create an interactive 3D audio game for mobile, like a movie but only for your ears. For that project we created an early version of the dearVR engine for Unity for in-house use. It was very successful, though only in the German market, so we began focusing on the technology itself, building 3D audio tools for anyone who wants to create experiences.

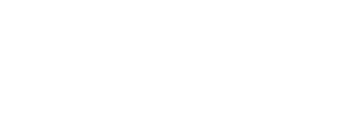

In 2015 we started focusing on the dearVR 3D audio reality engine which enables ultra-realistic acoustic virtualization for audio production. We released that for Unity at SXSW 2016, and soon we’re releasing VST/AAX/AU versions in addition to the Spatial Connect workstation.

What inspired you to create the dearVR Spatial Connect workstation?

We’ve done a lot of VR sound design for various projects over the years, and we always had the same problems bouncing between environments. We had our own dearVR VST plugin and our dearVR Unity plugin, and mixing for Unity meant trying things out in the DAW first. It never fit perfectly: you couldn’t change everything at once, and head rotation wasn't working in the early VST plugin. There was so much adjusting back and forth. We needed a workflow that let us really hear the mix from the DAW as it would sound in the VR experience.

That gave us the idea to create a pipeline that’s completely connected to your DAW: using our VST plugin, wearing the VR headset and mixing in VR, but still being able to make changes with the freedom of a DAW and all the plugins you’re used to. We wanted to export the whole mix to Unity, with all the audio files and the full object-based mix.

Dear Reality Founders: Achim Fell (left) & Christian Sander (right)


Do you collaborate with sound designers to get feedback on what they want?

Yes, we have one in-house and several others we’ve worked with such as DELTA Soundworks, the VR sound design company featured in the video. We met through Creative Pilots - an award for companies in the creative industry from the German government - and realized we both wanted the same tool. The feedback we receive has been very important and useful, and we’re always open to more.

I truly believe in what your company is doing, but hypothetically, why would a sound designer want to learn how to do what they’ve already been doing, but in a virtual space? Is the effort of learning these new interfaces just making it harder to do what we’re already good at?

Most sound engineers, musicians and sound designers are already learning a new workflow with VR. You start with the VST plugins, drawing each position on your screen in 2D, and you learn that it’s not very intuitive. You can’t move your head around, and you don’t know what works until you check with the headset on. All of that needs to take place in real time, and it has to take place within the same medium.

For example, when you mix for a movie theater, you essentially do the final mix in a movie theater. If you want to mix for VR, then you should do the final mix in VR. Wearing a headset, having head tracking, changing distance and acoustics while hearing how other sources behave, and viewing the mix all at once makes it so much easier. Companies like G'Audio and Oculus Spatialization are making great tools to help you see all the sources at the same time, but we really wanted to move inside the mix. The important thing is to be able to check the mix at any time and make adjustments instantly.

You have to learn, but it's not that hard. It's moving your hands around, not flying a jet. You have two controllers, and you can grab the object, so it’s very intuitive. You don't have to learn everything else because you already know how to work in your DAW and it’s still connected. So it's not a completely new workflow - it's more like an addition to properly work in VR.

Do you see any issues across different hardware and platforms? For example, if you're working on an Android app, you might not want the reverb exposed if you won’t be using the reverb engine on the phone. Do you see any separation there in terms of implementation?

Spatial Connect is running on Vive and Oculus. The final mix coming from Spatial Connect and being sent to Unity can be implemented on any platform (OSX, Win, Android, iOS) with our dearVR Unity Asset.

So on Android, take a low-end setup like a Samsung S7 with Gear VR: I know that if I don't set the buffer size too low, I can have at least 10 sound sources running plus one reverberation bus. Once I know that, I have to work within that budget. If I want to change the reverberation, I have to switch it on that same dearVR reverb bus, and then it works fine.

Something to keep in mind as a VR sound designer or engineer is that Gear VR is the most common platform at the moment, and also the weakest for audio, which is a pity. But you can always scale up and down. If I need 20 sources on the Gear VR at the same time and I don't want to do pooling (sharing a limited set of sources among different sounds), then I can't use the reverberation.
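To make "pooling" concrete: instead of giving every sound its own spatialized source, a fixed number of voices is shared and reassigned as sounds start. The sketch below is a minimal illustration under stated assumptions; the `VoicePool` class and its priority-based stealing policy are hypothetical and not part of dearVR or Unity. The budget of 10 is simply the Gear VR figure mentioned above.

```python
from dataclasses import dataclass, field


@dataclass
class VoicePool:
    """Hypothetical fixed-size voice pool (not a dearVR API).

    A limited number of spatialized sources ("voices") is shared by any
    number of sounds. Each voice is stored as (priority, sound_name),
    oldest first.
    """
    max_sources: int
    voices: list = field(default_factory=list)

    def play(self, sound: str, priority: int = 0) -> bool:
        """Try to start `sound`; return True if it got a voice."""
        if len(self.voices) < self.max_sources:
            self.voices.append((priority, sound))
            return True
        # Pool is full: find the lowest-priority voice. min() returns the
        # first match on ties, i.e. the oldest voice at that priority.
        victim = min(range(len(self.voices)), key=lambda i: self.voices[i][0])
        if self.voices[victim][0] > priority:
            return False  # every busy voice outranks the new sound
        # Steal the victim's voice for the new sound.
        del self.voices[victim]
        self.voices.append((priority, sound))
        return True


# Usage: 20 sounds competing for a 10-source budget never exceed 10 voices.
pool = VoicePool(max_sources=10)
for i in range(20):
    pool.play(f"sfx_{i}", priority=i % 3)
```

The design choice here is to steal the oldest voice among the lowest-priority ones, so that ambient one-shots can be marked low priority and yield to important cues. A real engine would also fade the stolen voice out rather than cut it abruptly.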

I know HRTF is a bit of a buzzword these days, but how are you addressing the fact that some HRTFs sound better to some people than others? Do you offer any HRTF implementations through Unity by swapping out SDKs, or are you working with your own HRTF?

HRTF is a very important field because it's the base technology of all the engines out there. We don’t yet offer outside implementations because we have very good HRTFs. Unfortunately I can't tell you which ones, or how we generate them, but that's definitely the key. We get great feedback on them: they have very good out-of-head localization and positioning, especially from above and below, which is important.

We put a lot of effort into optimizing our HRTFs, and it’s been an interesting process. There are so many improvements you learn just from experience that you can’t read about in papers. A lot of the psychoacoustic aspects are especially hard to cover. You have to combine your own experience, plus trial and error, and mix that up with all the research going on right now. That’s all part of the optimization process.

I really like that HRTF has become a buzzword, because more people are aware of it! I don't have to explain how it's simulating each position and all that anymore. I think that any 3D audio coverage in the media, even for our competitors, is good for the whole industry. When Valve acquired Impulsonic and released the Steam Audio SDK (formerly Phonon) in March, we had the highest sales for our dearVR Unity plugin that month. We do have a different approach that’s more focused on presets for sound design, because that's our background, but I think any attention on 3D audio is good for the entire industry.

How are you handling all the different output formats out there? I think Facebook 360 workstation, for example, is doing really great work simplifying the pipeline for different output formats.

Output formats are, of course, also very important. That’s something we’re tackling in our upcoming VST plugin. At the beginning it was only binaural output. Last year, when YouTube announced it would support first-order Ambisonics for 360 video, it was clear to us that it was going to become a standard because you don't need the metadata. Even though it's not the best quality (since it's scene-based audio rather than object-based), we knew we had to support it and let users output whatever format they need, whether they want to export a video for YouTube 360 or Facebook 360, export the mix to Unity, or both.
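The "no metadata" point can be illustrated with first-order Ambisonics encoding. A mono source at a given direction is baked into four channels, and summing sources yields a single sound field with no per-object positions left to carry. The sketch below assumes the ambiX convention (ACN channel order W, Y, Z, X with SN3D normalization), azimuth measured counterclockwise from straight ahead and elevation upward. The function names are illustrative, not from any Dear Reality product.

```python
import math


def encode_foa_ambix(sample: float, azimuth_deg: float, elevation_deg: float) -> list:
    """Encode one mono sample into first-order ambiX: [W, Y, Z, X], SN3D.

    Azimuth: counterclockwise from straight ahead (90 = left).
    Elevation: upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return [
        sample,                                # W: omnidirectional
        sample * math.sin(az) * math.cos(el),  # Y: left/right
        sample * math.sin(el),                 # Z: up/down
        sample * math.cos(az) * math.cos(el),  # X: front/back
    ]


def mix_scene(sources: list) -> list:
    """Sum (sample, azimuth, elevation) sources into one 4-channel frame.

    After summing, individual source positions can no longer be edited:
    only the combined sound field remains, which is what makes the
    format scene-based rather than object-based.
    """
    frame = [0.0, 0.0, 0.0, 0.0]
    for sample, az, el in sources:
        for ch, value in enumerate(encode_foa_ambix(sample, az, el)):
            frame[ch] += value
    return frame
```

An object-based mix, by contrast, keeps each source separate along with its position, which is what allows a Unity export to be re-rendered and adjusted per object.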

The version that is coming out will cover binaural output, but more for playback reasons, plus first-order, second-order, and third-order Ambisonics, as well as ambiX and FuMa. We also have a loudspeaker output so you can switch even if you've done the mix for binaural rendering. That was a very important feature that came to us from the Unity gaming world because sound designers don’t know if the user is using headphones or speakers. You can get that information from the device, and switch a flag on all Unity assets so the same mix is still working and the reverberation is working similarly for loudspeakers without doing a new mix.
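Two details behind that format list: full-sphere Ambisonics of order N uses (N + 1)² channels (4, 9 and 16 for first, second and third order), and ambiX and FuMa differ in channel order and normalization. The sketch below covers only the first-order case, where FuMa orders channels W, X, Y, Z and scales W by 1/√2 (about −3 dB) relative to ambiX's SN3D. Second- and third-order FuMa channels need additional per-channel weights, so a production converter would use a full conversion table. The function names are illustrative assumptions.

```python
import math


def ambisonic_channel_count(order: int) -> int:
    """Full-sphere Ambisonics of order N needs (N + 1) ** 2 channels."""
    return (order + 1) ** 2


def ambix_to_fuma_foa(frame: list) -> list:
    """First-order ambiX [W, Y, Z, X] (ACN/SN3D) -> FuMa [W, X, Y, Z].

    At first order, only the channel order and the W gain differ:
    FuMa's W is the SN3D W scaled by 1/sqrt(2).
    """
    w, y, z, x = frame
    return [w / math.sqrt(2), x, y, z]


def fuma_to_ambix_foa(frame: list) -> list:
    """Inverse conversion: first-order FuMa [W, X, Y, Z] -> ambiX [W, Y, Z, X]."""
    w, x, y, z = frame
    return [w * math.sqrt(2), y, z, x]
```

A round trip through both functions returns the original frame (up to floating-point rounding). Getting the W gain wrong is a common source of level mismatches between tools that assume different conventions.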

It’s exciting that you’re focusing on specific problems from an audio person’s perspective that we haven't seen yet. I see a lot of software companies pursuing VR that only have one audio guy as an afterthought, whereas you have audio people actually designing the audio software.

These are the people we want to address because they know how to work with audio. You can't just tell any game developer, "Hey, can you do the audio, too?" They would say, "Ok, let me activate 3D audio in Unity," or "Let me activate the interior Oculus spatializer SDK." There is a lot of knowledge you need to optimize it, so that should be done by someone in the audio field.

Since we have backgrounds in sound engineering and sound design, we know exactly what content creators need. Combined with the highly skilled developers on our team, we’re able to create unique 3D audio software technology and tools from content creators for content creators.

What is your perspective on the division we’re seeing with DAW integration between Pro Tools and Reaper? It seems like Spatial Connect could determine the new standard by dominating Unity/DAW integration.

Our philosophy is that we want to be platform-independent. We want to have it on all devices and operating systems, so we’re testing on Oculus, Vive, Windows, OSX, iOS, Android... We also tested on Linux, but didn't release it for that yet. We’re releasing a VST/AAX plugin soon which will be the DAW part of the dearVR Spatial Connect software, so we're testing on many DAWs at the moment.

We mainly use Reaper ourselves, but we all shift. I started with Cubase 18 years ago, and our co-founder Achim worked with Pro Tools, but we all found a great mix with Reaper. Developing VST plugins on Reaper is so fast - it's a very good tool, so that's why it's becoming so huge. There are so many updates and so many features coming out - you can plug in anything to anything, and do a lot of things wrong if you want to. And if I'm an expert, I want to do things wrong.

Like you said, we want to be the ones to bridge the gap between DAWs and Unity and be accepted in the market. That was the workflow we always wanted, and then we just created it. We started taking screenshots of the VST version and then set all the parameters in the Unity version manually. It was really a lot of work back then, but now all the metadata is being transferred to Unity with Spatial Connect and it sounds exactly the same as in the DAW.

It's a lot of fun, and you save so much time by listening and moving the sources at the same time and mixing with two controllers. And the feedback has been great. We’ve had a lot of shares on social media, and, for example, people in the Spatial Audio Group on Facebook saying, "Oh, finally, thank God." So we were not the only ones who had that problem and were waiting for this solution. We get a lot of exciting feedback and are open for sound designers to participate in the upcoming beta testing of Spatial Connect. They just need to sign up for our newsletter to stay tuned!

Follow Dear Reality: Website | Twitter | Facebook | Unity Asset Store

Thanks to Travis Fodor for conducting the interview!


Travis is a sound designer, audio engineer, composer, and musician with a passion for VR and immersive experiences.

Follow Travis: Twitter | LinkedIn
